Agentic AI

Feb 18, 2026

Akash Tanwar


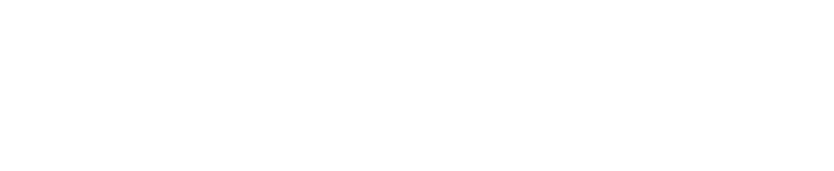

The Qualtrics 2026 Consumer Experience Trends Report, based on a survey of 20,000+ consumers across 14 countries, found that AI-powered customer service fails at nearly four times the rate of AI use in general. Nearly one in five consumers saw zero benefit from AI support interactions. Misuse of personal data is now the top consumer concern when companies deploy AI, and only 39% of people trust brands to handle their data responsibly. This post unpacks what those findings mean for B2C CX leaders, where the failure comes from, and what a structured, auditable AI support agent looks like in practice.

What Qualtrics Found

In Q3 2025, Qualtrics XM Institute surveyed more than 20,000 consumers across 14 countries as part of its 2026 Consumer Experience Trends Report. The scope was broad: eighteen industries and consumers across every age and income group.

The headline finding on AI was brutal.

Nearly one in five consumers who had used AI for customer service reported no benefit from the experience at all. That failure rate is almost four times higher than for AI use in general. For context, on convenience, time savings, and usefulness, consumers ranked AI-powered customer support second to last among the AI applications tested; only "building an AI assistant" ranked lower.

| Metric | Finding | Source |
|---|---|---|
| Consumers reporting zero benefit from AI support | 1 in 5 | Qualtrics 2026 CX Report |
| AI customer service failure rate vs. other AI uses | 4x higher | Qualtrics 2026 CX Report |
| Consumers who fear misuse of personal data in AI | 53% (up 8 pts YoY) | Qualtrics 2026 CX Report |
| Consumers who trust companies with their data | 39% | Qualtrics 2026 CX Report |
| Consumers worried about losing human contact | 50% | Qualtrics 2026 CX Report |

Isabelle Zdatny, head of thought leadership at Qualtrics XM Institute, was direct in the report: "Too many companies are deploying AI to cut costs, not solve problems, and customers can tell the difference."

That single sentence captures the entire failure mode.

Why This Matters More in B2C Than B2B

The Qualtrics numbers represent averages across all industries. But for B2C brands handling high ticket volumes in fintech, e-commerce, and digital subscriptions, the cost of failure is disproportionately high.

In B2C, a broken support interaction is not just a service failure. It is a loyalty event.

Qualtrics found that consumers who choose a brand for great customer service report 92% satisfaction and 89% trust. Those who choose on price alone sit at 87% and 83%. That 6-point gap in trust compounds across hundreds of thousands of interactions per year.

Meanwhile, only 29% of consumers now share negative feedback directly with companies after a bad experience, an all-time low, down 7.5 points since 2021. Another 30% say nothing at all. That means when AI fails a customer today, you are almost certainly not hearing about it. You are just watching retention decay.

For B2C CX leaders operating at scale, the failure modes are specific:

A refund mishandled by an AI agent that fabricates a confirmation leaves a customer disputing a refund they never received.

A subscription cancellation handled without policy enforcement exposes the brand to consumer rights violations.

An order inquiry that loses context after three turns leaves a customer with a delayed shipment and no answer, at exactly the moment they are deciding whether to reorder.

Each of these damages the customer relationship more, and faster, than having no AI at all.

The Real Problem: Deployment Philosophy, Not Technology

The Qualtrics report lands on a diagnosis that aligns closely with what Salesforce's CRMArena-Pro benchmark found: the technology itself is not the primary failure. The deployment philosophy is.

Most AI support agents are deployed as cost-reduction tools. They are measured on deflection rate, not resolution rate. They are built to handle volume, not to complete tasks. And they are deployed without the memory, policy enforcement, or audit infrastructure to function reliably in regulated B2C workflows.

The result is a familiar pattern. The agent answers questions until the conversation gets complicated, then either loops, escalates poorly, or fabricates a response. The customer leaves the interaction worse off than if they had emailed a human, and they rarely complain directly, so the brand never connects the AI deployment to the churn signal weeks later.

Here are the specific failure modes driving the numbers:

Context Loss in Multi-Turn Conversations

Most AI agents reset context after a few turns. A customer verifying an account, asking about a charge, and then requesting a partial refund across four messages is a completely standard support flow. An agent without persistent memory treats each message as a new conversation. The interaction fails, but the deflection metric still records it as "handled."
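A minimal sketch of what "persistent memory" can mean in practice, assuming a simple in-process store keyed by session ID. The names (`SessionStore`, `handle_turn`, and the fact fields) are hypothetical and not any vendor's API; the point is only that facts verified in an early turn are still present when the refund request arrives several messages later.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical set of facts the agent is allowed to carry between turns.
ALLOWED_FACTS = {"verified_customer_id", "disputed_charge_id", "requested_refund_cents"}


@dataclass
class SessionState:
    """Structured context that survives across turns (illustrative schema)."""
    session_id: str
    verified_customer_id: Optional[str] = None
    disputed_charge_id: Optional[str] = None
    requested_refund_cents: Optional[int] = None
    turns: List[str] = field(default_factory=list)


class SessionStore:
    """In-memory store keyed by session ID; a real system would persist this."""

    def __init__(self) -> None:
        self._sessions: Dict[str, SessionState] = {}

    def get(self, session_id: str) -> SessionState:
        # Reuse existing state instead of treating each message as a new conversation.
        if session_id not in self._sessions:
            self._sessions[session_id] = SessionState(session_id)
        return self._sessions[session_id]


def handle_turn(store: SessionStore, session_id: str, message: str, **facts) -> SessionState:
    """Append the message and merge newly verified facts into the session state."""
    unknown = set(facts) - ALLOWED_FACTS
    if unknown:
        raise KeyError(f"unknown session fields: {sorted(unknown)}")
    state = store.get(session_id)
    state.turns.append(message)
    for key, value in facts.items():
        setattr(state, key, value)
    return state
```

Walking the verify, charge, partial-refund flow through `handle_turn` with the same session ID leaves the customer ID from the first turn available on the last one. Restricting updates to a fixed field set is a deliberate choice: the model can fill known slots but cannot invent new state.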

Hallucinated Actions and False Confirmations

Without a deterministic execution layer, LLM-based agents will generate plausible-sounding confirmations for actions they did not perform. In fintech environments, this is not a quality issue. It is a compliance and fraud risk. A customer who is told their dispute was filed when it was not has legal standing in most markets.
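One common way to rule out false confirmations is to derive the customer-facing message from the typed result of the actual backend call rather than letting the model write it. The sketch below assumes a backend function that returns a dispute reference or raises; `file_dispute`, `ActionResult`, and the message wording are hypothetical illustrations.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    SUCCEEDED = "succeeded"
    FAILED = "failed"


@dataclass(frozen=True)
class ActionResult:
    status: Status
    reference: Optional[str] = None  # e.g. a dispute ID returned by the backend


def file_dispute(call_backend: Callable[[], str]) -> ActionResult:
    """Run the backend call; any exception becomes an explicit FAILED result."""
    try:
        reference = call_backend()
    except Exception:  # broad on purpose: no error path may fall through to "success"
        return ActionResult(Status.FAILED)
    return ActionResult(Status.SUCCEEDED, reference)


def confirmation_message(result: ActionResult) -> str:
    # Text is selected from the result, not generated freely, so a success
    # message is only possible when the backend returned a reference.
    if result.status is Status.SUCCEEDED and result.reference:
        return f"Your dispute has been filed. Reference: {result.reference}."
    return "I wasn't able to file your dispute, so I'm transferring you to a specialist."
```

An empty reference is treated as a failure too, so a backend that "succeeds" without returning an ID still cannot produce a confirmation.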

Privacy and Data Exposure

The Qualtrics report found that 53% of consumers fear misuse of personal data in AI interactions, up 8 points year over year. This fear is well-founded. Agents without proper tokenization and output scrubbing routinely surface account numbers that should be masked, internal record IDs, and escalation notes during standard conversations. In GDPR- and PCI-regulated environments, this is a violation, not just a bad experience.
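Tokenization keeps raw data away from the model, but a last-line output filter is still useful. Below is a minimal sketch of such a scrubber using Python's `re` module; the patterns are illustrative assumptions, not a complete PCI or GDPR control, and `REC-` is a hypothetical internal record ID format. A Luhn check keeps ordinary long numbers (such as order numbers) from being masked as cards.

```python
import re

# Illustrative patterns only; production rules need locale-aware testing.
IBAN_RE = re.compile(r"\b[A-Z]{2}\d{2}(?: ?[A-Z0-9]){11,30}\b")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")  # 13-19 digits, optional separators
RECORD_ID_RE = re.compile(r"\bREC-\d+\b")           # hypothetical internal ID format


def luhn_valid(digits: str) -> bool:
    """Luhn checksum; filters out long numbers that are not card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def _mask_card(match: re.Match) -> str:
    digits = re.sub(r"\D", "", match.group())
    if luhn_valid(digits):
        return f"[card ending {digits[-4:]}]"  # keep only the last four digits
    return match.group()


def scrub(text: str) -> str:
    """Output filter applied to every agent reply before it is sent."""
    text = IBAN_RE.sub("[IBAN redacted]", text)  # first, so IBAN digits aren't read as cards
    text = CARD_RE.sub(_mask_card, text)
    return RECORD_ID_RE.sub("[internal ID]", text)
```

For example, `scrub("Card 4111 1111 1111 1111, IBAN DE89 3704 0044 0532 0130 00, ticket REC-88231.")` masks all three values, while an order number that fails the Luhn check passes through unchanged.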

No Audit Trail

When a regulator asks why an AI agent told a customer they were eligible for a refund they were not owed, or why a cancellation was processed without a required notice period, a flat conversation log does not answer the question. Without SHA-256 hash-chained action logs, every AI-involved support incident becomes a liability.
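A minimal sketch of a SHA-256 hash-chained action log using only Python's standard library (`hashlib`, `json`). The record layout and the all-zero genesis value are illustrative assumptions, not Fini's log format. Each record's hash covers its own content plus the previous record's hash, so editing or reordering any earlier entry breaks verification from that point on.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass(frozen=True)
class AuditRecord:
    action: dict
    prev_hash: str
    hash: str


def _digest(action: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys, compact separators) so the hash is reproducible.
    payload = json.dumps({"action": action, "prev": prev_hash}, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    def __init__(self) -> None:
        self.records: List[AuditRecord] = []

    def append(self, action: dict) -> AuditRecord:
        prev = self.records[-1].hash if self.records else GENESIS
        record = AuditRecord(action, prev, _digest(action, prev))
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every link; an edited or reordered record breaks the chain."""
        prev = GENESIS
        for record in self.records:
            if record.prev_hash != prev or record.hash != _digest(record.action, prev):
                return False
            prev = record.hash
        return True
```

Chaining makes tampering detectable rather than impossible: whoever controls the entire log could recompute every hash, so it helps to store the latest hash somewhere the log writer cannot modify.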

What B2C CX Leaders Should Actually Be Asking Vendors

The Qualtrics findings establish a new bar. The question for 2026 is no longer "can your AI handle our FAQ volume?" The question is:

Does the agent maintain verified context across a full resolution lifecycle, not just a single session?

Can it execute policy-aware actions through typed API integrations, not just generate text about those actions?

Is every decision logged with a traceable audit chain that satisfies GDPR, PCI-DSS, and internal compliance review?

Can you cap inference costs and define hard fallback behaviors at the workflow level?

Can the system run within your VPC to maintain data sovereignty?

If the answer to any of those is no or "we're working on it," the agent is not enterprise-ready for B2C support at scale.

One Alternative: How Fini Is Built for This Environment

Fini was designed from the ground up for the exact environment the Qualtrics report describes. High volume. Regulated data. Customers who will not tell you when something goes wrong, but will quietly churn.

The architecture is different at a structural level.

| Failure Mode | Generic AI Agents | Fini |
|---|---|---|
| Context loss | Stateless per session | Persistent memory across multi-turn flows |
| Action execution | Prompt-generated text | API-typed Action Graph with deterministic paths |
| PII handling | Unscoped output | PCI-compliant tokenization via Stripe and VGS |
| Data privacy | No output scrubbing | Masking enforced at the execution layer |
| Audit logging | Flat logs or none | SHA-256 hash-chained action records |
| Model cost control | Fixed premium LLMs | Dynamic routing with open-weight fallback for simple tasks |
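The table's "dynamic routing" row, and the earlier vendor question about capping inference costs and defining hard fallbacks, can be illustrated with a small per-workflow router. This is a sketch under stated assumptions, not Fini's routing logic: the model names, the set of "simple" intents, and the per-call prices are all hypothetical placeholders. `Decimal` keeps the budget arithmetic exact.

```python
from dataclasses import dataclass
from decimal import Decimal

# Placeholder model tiers and per-call prices; substitute your providers' real figures.
OPEN_WEIGHT = ("open-weight-small", Decimal("0.0005"))
PREMIUM = ("premium-llm", Decimal("0.01"))

# Hypothetical intents considered simple enough for the cheaper tier.
SIMPLE_INTENTS = {"order_status", "password_reset", "shipping_policy"}

FALLBACK = "escalate_to_human"


@dataclass
class WorkflowBudget:
    cap_usd: Decimal
    spent_usd: Decimal = Decimal("0")


def route(intent: str, budget: WorkflowBudget) -> str:
    """Pick the cheaper tier for simple intents; fall back once the cap would be exceeded."""
    model, cost = OPEN_WEIGHT if intent in SIMPLE_INTENTS else PREMIUM
    if budget.spent_usd + cost > budget.cap_usd:
        return FALLBACK  # predefined behavior instead of unbounded spend
    budget.spent_usd += cost
    return model
```

With a $0.02 cap, an `order_status` call and one `refund_dispute` call fit, a second `refund_dispute` is refused and escalated, yet a later cheap call can still fit under the cap. That is a design choice; a stricter variant would stop routing entirely after the first refusal.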

Fini does not replace your support agents. It runs inside your existing Salesforce, Zendesk, or Intercom environment with scoped permissions and defined fallback paths. Your human agents handle what the AI cannot, with full context transferred automatically.

A Real-World Result: 600,000+ Tickets in Fintech

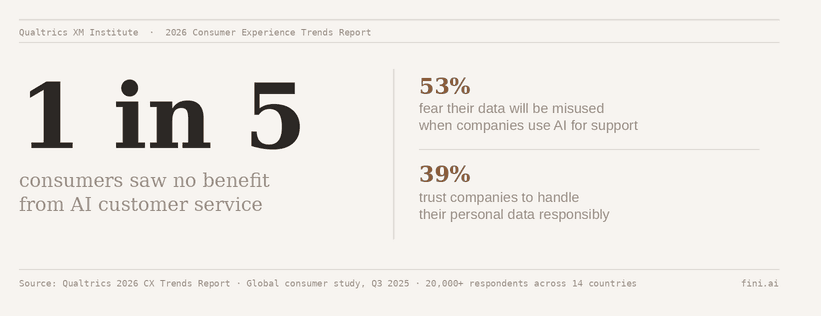

A US fintech used Fini to automate refund processing, plan change flows, and KYC verification across their support operation.

| Metric | Before Fini | After Fini |
|---|---|---|
| Multi-turn resolution rate | 34.8% | 91.2% |
| Cost per resolved ticket | $2.35 | $0.70 |
| CSAT (1-5 scale) | 3.1 | 4.4 |
| Payback period | N/A | Under 90 days |
| Agent workload | Baseline | 50% reduction |
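The before/after figures in the table above can be turned into relative changes with a few lines of arithmetic. The inputs are the table's numbers; the calculation itself is only an illustration and not part of the reported results.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Before/after figures from the results table.
resolution = (34.8, 91.2)  # multi-turn resolution rate, %
cost = (2.35, 0.70)        # USD per resolved ticket
csat = (3.1, 4.4)          # 1-5 scale

print(f"Resolution: +{resolution[1] - resolution[0]:.1f} points, "
      f"{resolution[1] / resolution[0]:.2f}x the baseline")
print(f"Cost per resolved ticket: {pct_change(*cost):.1f}%")
print(f"CSAT: +{csat[1] - csat[0]:.1f} points ({pct_change(*csat):.1f}%)")
```

In other words, multi-turn resolution rose to roughly 2.6 times its baseline and cost per resolved ticket fell by about 70%.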

The result on trust metrics was notable. Post-deployment sentiment data showed a 2x increase in positive phrases like "felt cared for" in follow-up surveys. Retargeting click-through rates on the same customers improved 17% in the quarter following deployment.

This aligns directly with what Qualtrics identified: customers who experience genuinely good service, not deflection masquerading as support, become the highest-trust, highest-loyalty cohort in your base.

What a Deterministic Refund Flow Looks Like

To make this concrete, here is what a structured cancel-and-refund workflow looks like in Fini's Action Graph:

No generative guesswork. No prompt chain that can hallucinate a confirmation. Every step is logged, policy-bounded, and auditable. This is what "resolving the issue" actually means, versus deflecting it.
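As a generic illustration of the same idea (not Fini's Action Graph syntax), the sketch below writes a cancel-and-refund flow as fixed, ordered steps: verify identity, check policy, cancel, refund. Any failed check or API call stops the flow and escalates, and the `steps` list records only what actually happened. The 14-day refund window, the field names, and the injected `cancel`/`refund` callables are hypothetical stand-ins for a real policy and billing API.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Callable, List

REFUND_WINDOW = timedelta(days=14)  # hypothetical policy value


@dataclass(frozen=True)
class RefundRequest:
    customer_id: str
    identity_verified: bool
    charge_id: str
    charge_date: date
    charge_cents: int
    refund_cents: int


@dataclass
class FlowResult:
    outcome: str = "escalated"                      # becomes "refunded" only at the end
    steps: List[str] = field(default_factory=list)  # ordered log of completed steps
    reason: str = ""


def cancel_and_refund(
    req: RefundRequest,
    today: date,
    cancel: Callable[[str], bool],
    refund: Callable[[str, int], bool],
) -> FlowResult:
    """Run fixed steps in order; any failed check or API call stops and escalates."""
    result = FlowResult()

    def escalate(reason: str) -> FlowResult:
        result.reason = reason
        result.steps.append(f"escalated: {reason}")
        return result

    if not req.identity_verified:
        return escalate("identity not verified")
    result.steps.append("identity verified")

    if today - req.charge_date > REFUND_WINDOW:
        return escalate("outside refund window")
    if not 0 < req.refund_cents <= req.charge_cents:
        return escalate("refund amount outside policy")
    result.steps.append("policy check passed")

    if not cancel(req.customer_id):
        return escalate("cancellation API failed")
    result.steps.append("subscription cancelled")

    if not refund(req.charge_id, req.refund_cents):
        return escalate("refund API failed")
    result.steps.append(f"refunded {req.refund_cents} cents on {req.charge_id}")

    result.outcome = "refunded"
    return result
```

The partial-failure case matters most: if cancellation succeeds but the refund call fails, the flow escalates with the cancellation already logged, so a human agent sees exactly what was done instead of inheriting a fabricated "refund issued" message.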

The Bottom Line for 2026

The Qualtrics report is the second major third-party study, following Salesforce's CRMArena-Pro benchmark, to confirm the same structural problem: AI support as it is commonly deployed is not working, and customers are paying attention.

The companies that will build durable B2C loyalty in 2026 are the ones who stop measuring AI on deflection and start measuring it on verified resolution, trust scores, and audit compliance. That requires a different kind of architecture.

Fini deploys inside your existing stack in under 10 days. No replatforming. No hallucinations on critical workflows. And a per-ticket cost that runs a fraction of what generic LLM agents currently cost.

If you want to see what structured, auditable AI support looks like in practice, across Salesforce, Zendesk, or Intercom, book a 30-minute walkthrough. Real flows, real data, real resolutions.

Q1: What did the Qualtrics 2026 Consumer Experience Trends Report find about AI customer service? The study, which surveyed 20,000+ consumers across 14 countries, found that nearly one in five consumers saw no benefit from AI-powered customer service interactions. That failure rate is almost four times higher than AI use in other contexts.

Q2: Why is AI customer service failing at such a high rate? The primary cause is deployment philosophy. Most AI support tools are built to deflect volume, not to resolve issues. They lack persistent memory, deterministic action execution, and policy enforcement, which means they fail in any multi-step flow that requires context, secure action, or regulatory compliance.

Q3: What are consumers most concerned about when companies use AI for support? Misuse of personal data is the top concern, cited by 53% of consumers and rising 8 points year over year. Half of consumers also worry about losing the ability to reach a human agent.

Q4: How does this connect to churn? Qualtrics found that only 29% of consumers share negative feedback directly after bad experiences, an all-time low. Nearly half of bad AI support experiences lead to decreased spending, meaning brands are losing customers without receiving any direct signal about why.

Q5: What failure modes drive poor AI support outcomes in fintech and e-commerce? The main failure modes are context loss in multi-turn conversations, hallucinated action confirmations, unscoped PII exposure, and absent audit trails. All four create downstream compliance and trust exposure in regulated B2C environments.

Q6: What should CX leaders require from an AI support agent in 2026? Persistent conversation memory, verified API-based action execution, PCI and GDPR-compliant data handling, hash-chained audit logs, and the ability to deploy within your own cloud or VPC for data sovereignty.

Q7: How does Fini address the context loss problem? Fini maintains structured session state across multi-turn flows, not just prompt chaining. This means the agent retains verified customer context through a full refund, cancellation, or escalation lifecycle without losing information mid-conversation.

Q8: How does Fini handle PII in support interactions? Fini tokenizes sensitive fields via Stripe or VGS and enforces output scrubbing at the execution layer. Account numbers, IBANs, and internal record IDs are never surfaced in agent responses.

Q9: What is Fini's Action Graph? It is a typed, deterministic execution map that defines exactly what actions an agent can take, such as issuing refunds, verifying identity, or updating subscriptions, with every step logged and auditable. Actions are policy-bounded and cannot generate hallucinated confirmations.

Q10: What results has Fini delivered in fintech support? In one deployment covering 600,000+ tickets, Fini raised multi-turn resolution from 34.8% to 91.2%, reduced cost per resolved ticket from $2.35 to $0.70, improved CSAT from 3.1 to 4.4, and delivered full payback in under 90 days.

Q11: How long does Fini take to deploy? Most enterprise teams go live within 5 to 10 business days using Fini's prebuilt templates for refund, escalation, and KYC flows. No replatforming of existing helpdesk infrastructure is required.

Q12: Does Fini replace human agents? No. Fini handles the 70 to 90 percent of repeatable, policy-bounded interactions and escalates complex or high-emotion cases to human agents with complete context intact, never a cold handoff.

Q13: Is Fini compliant with SOC 2 and GDPR? Yes. Fini is SOC 2 certified, deployable within your cloud or VPC, and designed to meet GDPR, PCI-DSS, and FinCEN requirements for regulated B2C support environments.

Q14: What integrations does Fini support? Fini integrates natively with Salesforce, Zendesk, and Intercom, and connects to payment infrastructure including Stripe and VGS for secure action execution.

Q15: Where can I see a live demo? Book a 30-minute walkthrough here. Fini will demonstrate live flows across your current helpdesk environment, covering refund handling, escalation paths, and PII masking in production.

GTM Lead